Can Machines Read Between the Lines?

RebelsNLU: Reading between the Lines for Natural Language Understanding

Associate Professor: INOUE Naoya

E-mail:

[Research areas]

Natural Language Processing, Language Understanding, Commonsense Reasoning

[Keywords]

Language Model, Deep Learning, AI, Explainability, Data Science, Inference, Argumentation Analysis

Skills and background we are looking for in prospective students

Required: Passion for creating machines that can understand human language. Preferred: (a) basic knowledge of linear algebra, probability, statistics, and algorithms, and (b) programming experience.

What you can expect to learn in this laboratory

In the lab, you will actively discuss with lab members, come up with innovative ideas, implement these ideas as computational models, and evaluate them quantitatively. After a number of trials, you will obtain interesting insights. We then write a paper and submit it to an academic conference, polishing the idea further through communication with researchers worldwide. Through these activities, you will acquire many general-purpose skills in addition to expertise in natural language processing research and related areas. You will learn how to think critically, how to dive into unknown fields, how to plan, how to present your work, how to program, and how to work as a team.

[Job category of graduates] Academia, Information Technology

Research outline

We study how to create machines that can understand human language. Our research field is called Natural Language Processing (NLP), which covers a wide variety of research topics. Our focus is to equip machines with the ability to “infer”: to make implicit things explicit and read between the lines. Examples of our research topics include the following:

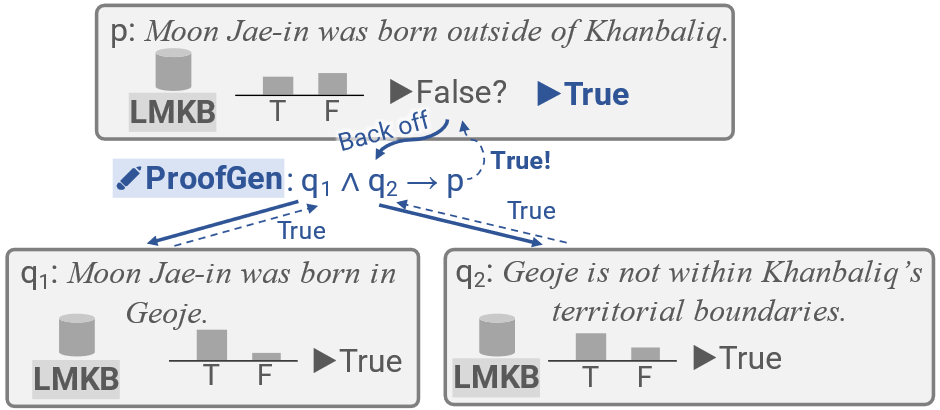

1. Self-aware Language Models

When a Large Language Model (LLM) is asked a question that cannot be answered from its training data, can it recognize its own lack of knowledge? We are pursuing research to advance LLMs by incorporating a self-awareness mechanism into them. We successfully enabled an LLM to activate a deliberation circuit when it became aware of its ignorance, resulting in more accurate answers [1].

Figure 1: Question Answering model with deliberative thinking [1]

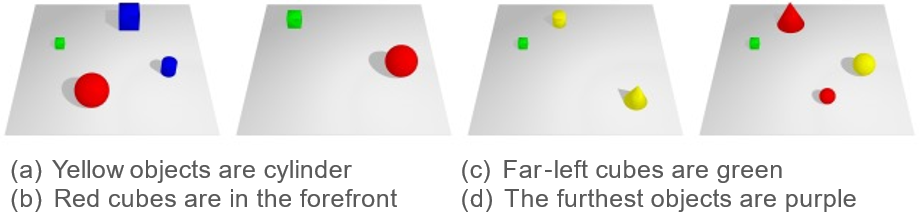

2. Vision-integrated LLMs Capable of Complex Reasoning

Can Language-and-Vision-Integrated LLMs (VLLMs) answer complex questions about images? We are conducting research to provide VLLMs with advanced reasoning capabilities. We developed a dataset for identifying common patterns across multiple images, evaluated the performance of state-of-the-art VLLMs on it, and identified current challenges [2].

Figure 2: Benchmark of visual inductive reasoning [2]

3. In-context Learning

LLMs are known to learn new tasks from their input prompts through in-context learning, but what specific mechanisms make this possible? We have comprehensively examined how LLMs behave when they learn input and output formats through in-context learning.

4. Argumentation Analysis and Assessment

We are working on computational models that can analyze the logical structure in argumentative texts such as debate speeches and essays, evaluate the validity of the logic, and suggest weaknesses and improvements to learners. We constructed a dataset to evaluate the performance of such models [3].

In addition to the above themes, we conduct thorough research on "reasoning" in various fields such as inference in action planning. Our lab embarked on an exhilarating journey on 4/1/2022, and we're crafting everything from the ground up! If these topics ignite your curiosity, be a part of our adventure. Your energy and passion are exactly what we need to turbocharge our lab's journey into the unknown. Join us, and let's make waves together! We very much welcome those who wish to pursue a doctoral degree to deeply explore this area.

Key publications

[1] Inoue et al. Can self-awareness improve the reliability of LM as KB? (in Japanese). In the 30th Annual Conference of the Association for Natural Language Processing, 4 pages, 2024.

[2] Shi et al. Find-the-Common: Benchmarking Inductive Reasoning Ability on Vision-Language Models. In the 30th Annual Conference of the Association for Natural Language Processing, 4 pages, 2024.

[3] Robbani et al. Templates for Fallacious Arguments Towards Deeper Logical Error Comprehension. In the 30th Annual Conference of the Association for Natural Language Processing, 4 pages, 2024.

Equipment

CPU/GPU Cluster Machines

Teaching policy

I respect you and will do my best to bring out the best in you. I will help you become an independent researcher: someone who can plan a research project that you truly enjoy and make progress on it yourself. I also encourage you to present your work at academic conferences and to collaborate with researchers worldwide so that you become a global researcher. Our lab has a wide variety of study and reading groups as well as weekly meetings. We communicate in English to keep our lab a global environment.

[Lab website] URL: https://rebelsnlu.super.site/