Multimodal Computational Modeling for Recognizing and Generating Social Signals from Interaction and Human Behaviors

Social Signal and Multimodal Interaction Laboratory

Professor: OKADA Shogo

E-mail:

[Research areas]

Multimodal interaction, Machine learning, Social Signal Processing

[Keywords]

Human behavior analysis, Human dynamics, Affective computing

Skills and background we are looking for in prospective students

Experience in programming, machine learning, and multimedia (audio, vision, language) processing is desirable.

What you can expect to learn in this laboratory

Machine learning and multimedia (audio, vision, language) processing for social signal processing, along with the fundamental skills required of a data scientist.

【Job category of graduates】ICT companies, IT consulting companies

Research outline

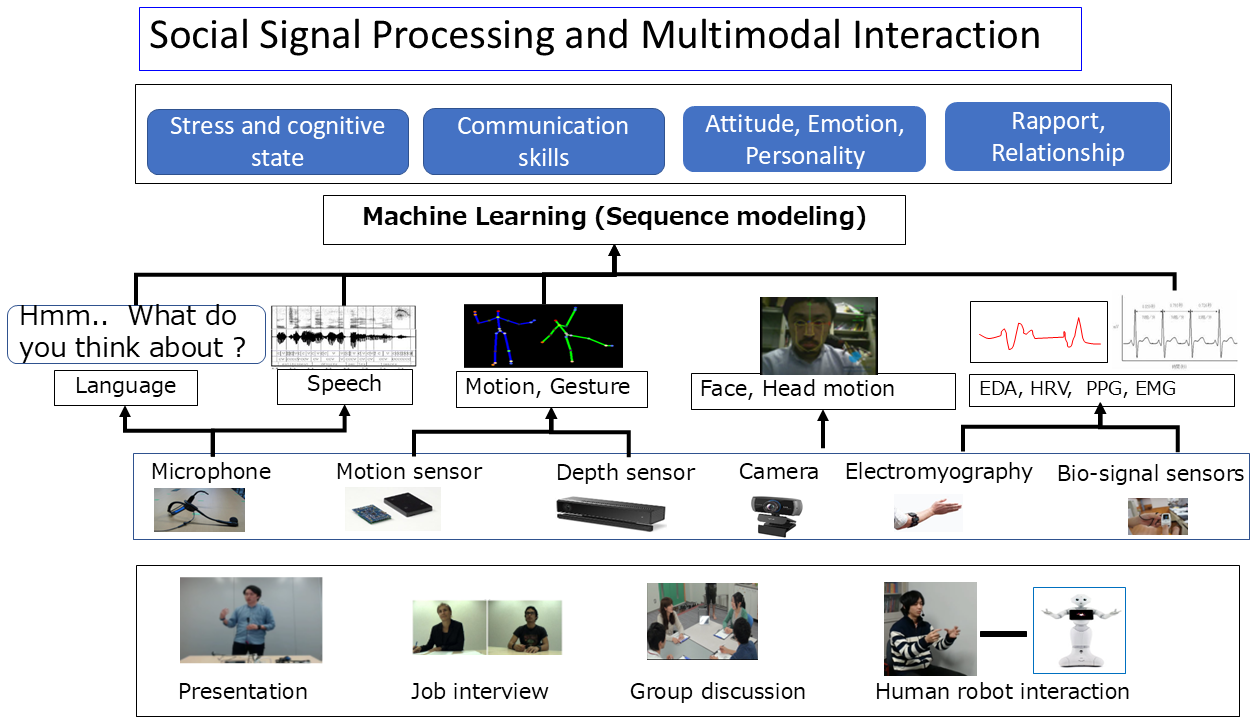

Multimodal Interaction and Social Signal Processing:

Our research focuses on building computational models of multimodal social signals (speech, gaze, gesture, and so on) using speech signal processing, image processing, motion sensor processing, and pattern recognition techniques. Our research question is how these social signal patterns influence high-level outputs and tacit knowledge, such as the output of consensus building, communication skills, and explanation skills. These modeling techniques can also be used to develop sensing modules for conversational agents.

Machine Learning and Data Mining:

The phenomena of multimodal interaction and human dynamics are observed as continuous multidimensional time-series data from multiple sensors. Machine learning techniques are important for building recognition models from these multidimensional time-series data sets.

Data mining algorithms are also useful for discovering the structure of these patterns. In particular, we focus on developing time-series clustering, multidimensional motif discovery, and change point detection algorithms and applying them to find various patterns.

Key publications

- Shogo Okada et al., Modeling Dyadic and Group Impressions with Intermodal and Interperson Features, ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 15(1s): 13:1-13:30 (2019)

- Shun Katada, Shogo Okada and Kazunori Komatani, Effects of Physiological Signals in Different Types of Multimodal Sentiment Estimation, IEEE Transactions on Affective Computing, vol. 14, no. 3, pp. 2443-2457 (2023)

- Fuminori Nagasawa, Shogo Okada, et al., Adaptive Interview Strategy Based on Interviewee’s Speaking Willingness Recognition for Interview Robots, IEEE Transactions on Affective Computing, Early Access (2023)

Equipment

Humanoid robot, Motion sensors, EMG sensor, Microphone array, Computation infrastructure (GPU, CPU).

Teaching policy

Scientific research methodology, Critical thinking, Scientific communication

[Website] URL : https://www.jaist.ac.jp/~okada-s/