Robotics Laboratory

Humanoid Robot

Imitation Learning under POMDP in Assistive Kitchen System

Introduction

In general context of learning, trying to copy a movement or a certain task demonstrated physically by others is a common way for humans to accomplish similar task. In learning how to dance by imitation, human tries to match their limbs configurations to others to get the same posture. On the other hand, in case of learning how to pour a water properly from a glass to another by imitation, similar limbs configuration sometimes not a big issue, as long as the water is properly poured.

Two different kinds of imitation learning are well-known in robotics, namely behavioral-based and goal-directed based imitation learning. Behavioral-based Imitation Learning is similar with learning how to dance, which means the imitator's main objective is to get the same posture and behavior as the demonstrator. Goal-directed imitation learning only concerns about how to get the same outcome of a certain task demonstrated. Due to the fact that it concerns about the physical link configuration in an articulated robot, it has a very high complexity especially in a redundant manipulator. Goal-directed imitation learning is useful in a case where we need a manipulator to accomplish a task imitated by human or other robot. It focuses on getting things done instead of the manipulator posture. Using a goal-directed imitation learning algorithm, we don't need to program the robot explicitly to do a certain task performed by others.

To achieve a solid-structured and organized machine learning, we need a mathematical framework of learning environment for the agent(decision maker). Markov models are popular mathematical tools used widely in machine learning research. Comprises four different types, Markov Chain(MC), Hidden Markov Model(HMM), Markov Decision Process(MDP), and Partially Observable Markov Decision Process(POMDP), each of them provides different properties under particular conditions to represent the learning environment in machine learning algorithm. Since action transitions are not controllable in both markov chain and HMM, MDP and POMDP provides a controllable action transition property for learning environment representation. MDP describes a deterministic relation between an agent and the environment. Analogous to markov chain in terms of the agent's current state certainty, the agent in MDP representation knows exactly its current state. Meanwhile, POMDP is a stochastic representation of learning environment with addition of controllable action transitions, and also deals with uncertainty elements of the agent's current state.

Since a more realistic environment representation deals with uncertainty, a stochastic representation is preferable, which makes POMDP a better representation as a learning environment. Despite of the natural representation, it is intractable to get an exact POMDP solution. There has been vast development of practical POMDPs algorithm in the recent years. Value iteration based on the discretization of each continuous state space is the common way to solve POMDP. It is practical if the number of states and horizon is relatively small. When it comes to a large amount of states and horizon, "Curse of Dimensionality" occurs, when the computational complexity increases drastically with the dimension of belief space, and it becomes intractable to solve. This is a dilemma where a fine discretization would lead to a "Curse of Dimensionality" and poor discretization would lead to poor representation of the state space.

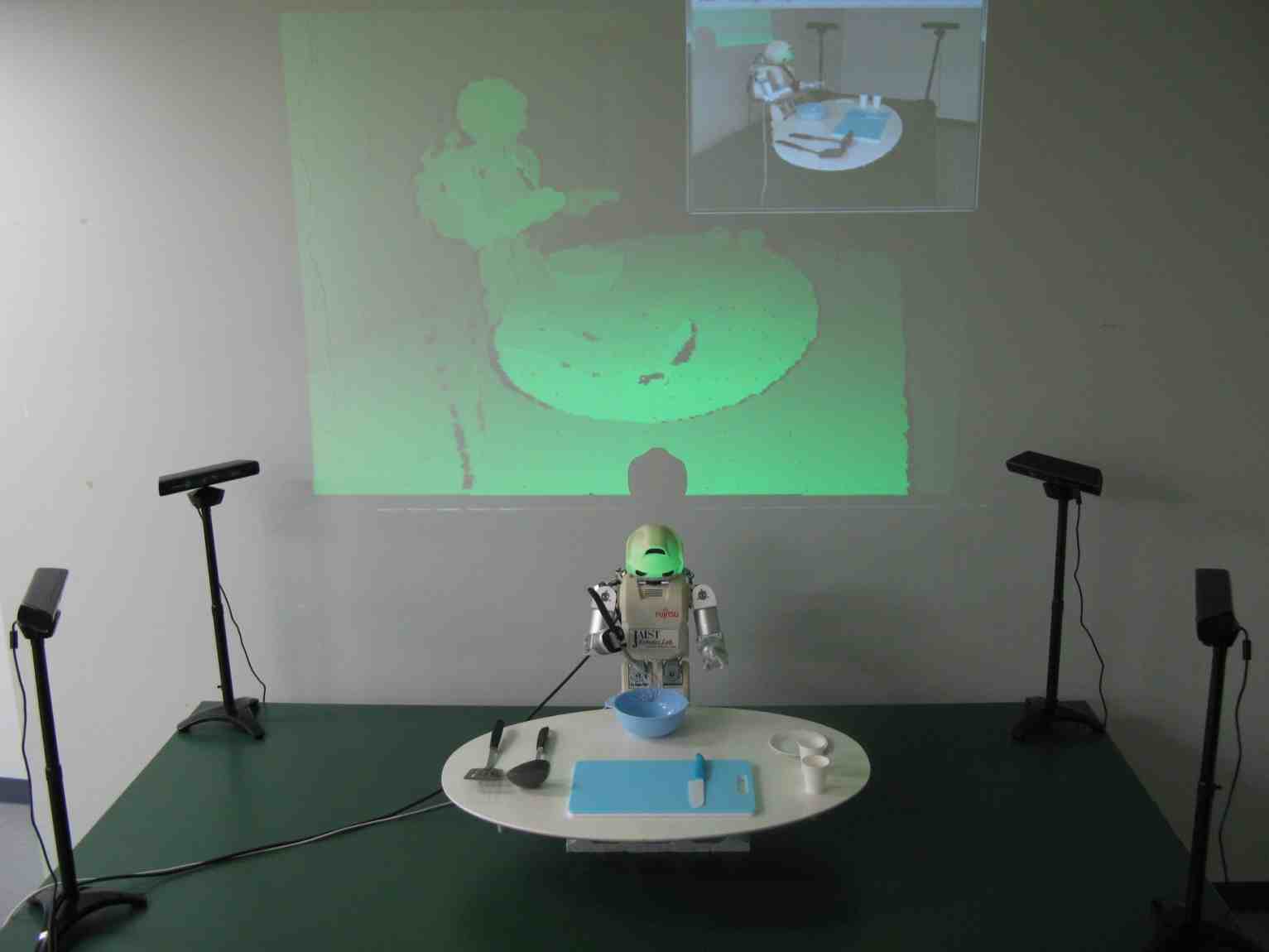

To illustrate the application of the proposed learning algorithm, we introduce an Assistive Kitchen System. The elements of Assistive Kitchen System basically consists of a learning agent(decision maker), things to interact with, and couple of sensors to gather data as the input for the agent. The agent can be either a humanoid robot or a manipulator, with the same purpose: to assist the human in the environment by manipulating objects using imitation learning.

Our research offers one alternative way to deal with this kind of situation. To implement a goal-directed imitation learning in an assistive kitchen system, we started with the learning by imitation. Using POMDP as the environment representation, we developed a practical yet easy-to-implement imitation learning algorithm that deals with continuous states focusing on one specific task. We performed numerical and 3D simulations to verify our algorithm.